Overview

1 - Why LLMariner?

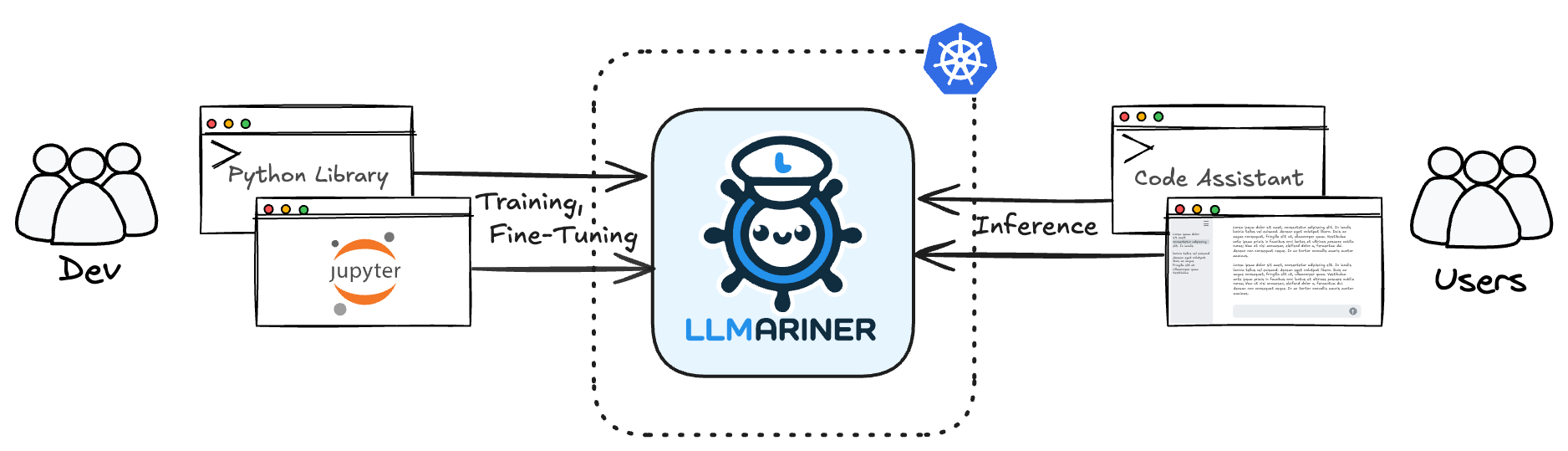

LLMariner (= LLM + Mariner) is an extensible open-source platform that simplifies the management of generative AI workloads. Built on Kubernetes, it enables you to efficiently handle both training and inference data within your own clusters. Because its APIs are OpenAI-compatible, LLMariner can draw on the existing ecosystem of tools built for those APIs, which makes it straightforward to integrate with a wide range of AI-driven applications.

Why You Need LLMariner, and What It Can Do for You

As generative AI becomes more integral to business operations, a platform that can manage the full lifecycle of generative AI workloads, from data management to deployment, is essential. LLMariner offers a unified solution that enables users to:

- Centralize Model Management: Manage data, resources, and AI model lifecycles all in one place, reducing the overhead of fragmented systems.

- Utilize an Existing Ecosystem: LLMariner’s OpenAI-compatible APIs make it easy to integrate with popular AI tools, such as assistant web UIs, code generation tools, and more.

- Optimize Resource Utilization: Its Kubernetes-based architecture enables efficient scaling and resource management in response to user demands.
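To make the OpenAI compatibility concrete, here is a minimal sketch of the kind of request an OpenAI-style client sends. The `/chat/completions` path, bearer-token header, and JSON body follow the public OpenAI API; the base URL, API key, and model ID are placeholders chosen for illustration, not values defined by LLMariner, and `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values (assumptions): replace with your own endpoint, key, and model ID.
request = build_chat_request(
    base_url="http://localhost:8080/v1",
    api_key="<your-api-key>",
    model="<model-id>",
    prompt="What is Kubernetes?",
)
# To actually send the request: urllib.request.urlopen(request)
```

Any tool that lets you override the OpenAI base URL should be able to produce an equivalent request, which is what makes integration with existing tools practical.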

Why Choose LLMariner

LLMariner stands out with its focus on extensibility, compatibility, and scalability:

- Open Ecosystem: By aligning with OpenAI’s API standards, LLMariner allows you to use a vast array of tools, enabling diverse use cases from conversational AI to intelligent code assistance.

- Kubernetes-Powered Scalability: Leveraging Kubernetes ensures that LLMariner remains efficient, scalable, and adaptable to changing resource demands, making it suitable for teams of any size.

- Customizable and Extensible: Built with openness in mind, LLMariner can be customized to fit specific workflows, empowering you to build upon its core for unique applications.

What’s Next

- Take a look at High-Level Architecture

- Take a look at LLMariner core Features

- Ready to Get Started?

2 - High-Level Architecture

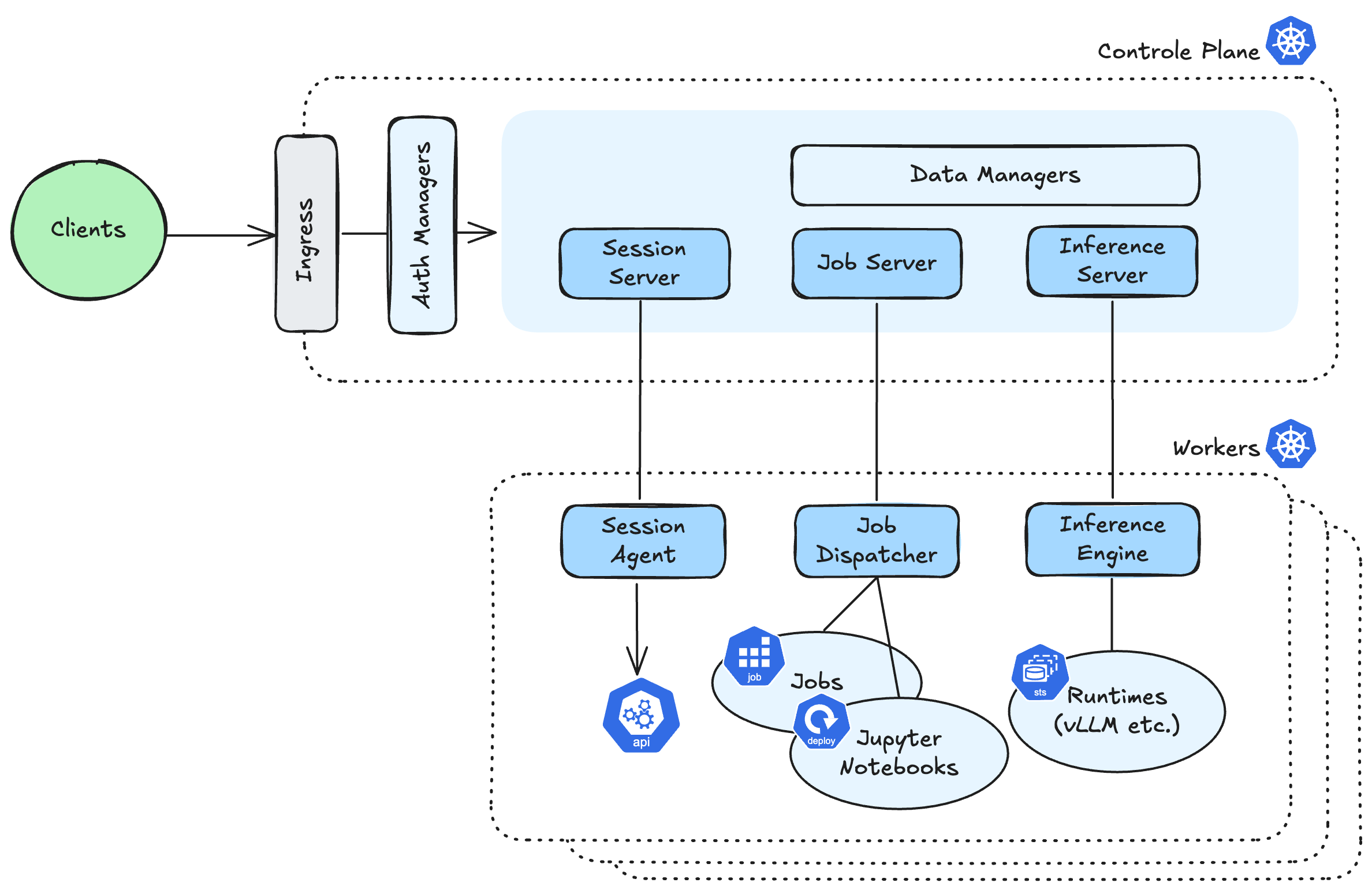

This page provides a high-level overview of the essential components that make up LLMariner.

Overall Design

LLMariner consists of a control-plane and one or more worker-planes:

- Control-Plane components: Receive requests from clients, expose the OpenAI-compatible APIs, and manage the overall state of LLMariner.

- Worker-Plane components: Run on every worker cluster and process tasks using compute resources such as GPUs, in response to requests from the control-plane.
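The split between the two planes can be pictured with a toy model. This is only a sketch under stated assumptions: the class names are hypothetical, the transport between planes is omitted, and round-robin selection of a worker cluster is an assumption, since this page does not describe how LLMariner chooses a cluster.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class WorkerCluster:
    """Stand-in for a worker-plane: processes tasks on its own compute (e.g. GPUs)."""
    name: str
    completed: list = field(default_factory=list)

    def process(self, task: str) -> str:
        self.completed.append(task)
        return f"{self.name} processed {task}"


class ControlPlane:
    """Stand-in for the control-plane: receives client requests and hands them to workers."""

    def __init__(self, workers):
        if not workers:
            raise ValueError("at least one worker cluster is required")
        # Round-robin is an assumption made for this sketch only.
        self._next_worker = cycle(workers)

    def handle_request(self, task: str) -> str:
        return next(self._next_worker).process(task)


control_plane = ControlPlane([WorkerCluster("cluster-a"), WorkerCluster("cluster-b")])
print(control_plane.handle_request("inference-1"))
print(control_plane.handle_request("fine-tune-1"))
```

The point of the sketch is the division of responsibility: clients only ever talk to the control plane, while GPU work happens in the worker clusters.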

Core Components

Here’s a brief overview of the main components:

- Inference Manager: Manage inference runtimes (e.g., vLLM and Ollama) in containers, load models, and process requests. Also auto-scale runtimes based on the number of in-flight requests.

- Job Manager: Run fine-tuning or training jobs based on requests, and launch Jupyter Notebooks.

- Session Manager: Forward client requests that need the Kubernetes API, such as displaying Job logs, to the worker cluster.

- Data Managers: Manage models, files, and vector data for RAG.

- Auth Managers: Manage information such as users, organizations, and clusters, and perform authentication and role-based access control for API requests.
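Scaling runtimes on the number of in-flight requests can be illustrated with a generic target-based rule. This is a sketch, not the Inference Manager's actual policy: the function name, the per-replica target, and the replica bounds are all assumptions introduced here.

```python
import math


def desired_replicas(
    in_flight: int,
    target_per_replica: int,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Return how many runtime replicas are needed so that each one
    handles at most `target_per_replica` in-flight requests,
    clamped to the [min_replicas, max_replicas] range."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    needed = math.ceil(in_flight / target_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# 25 in-flight requests with a target of 10 per replica need 3 replicas.
print(desired_replicas(in_flight=25, target_per_replica=10))
```

Clamping to a minimum keeps at least one runtime warm when traffic drops to zero, and the maximum caps GPU usage during spikes; both bounds are tuning choices rather than fixed values.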

What’s Next

- Ready to Get Started?

- Take a look at the Technical Details

- Take a look at LLMariner core Features